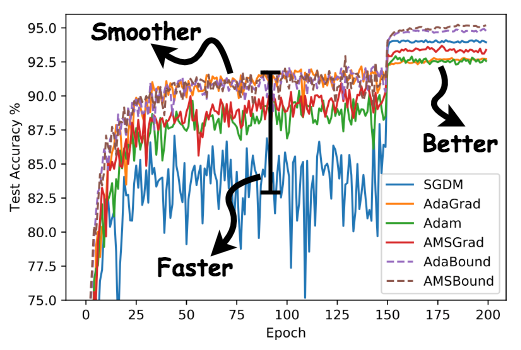

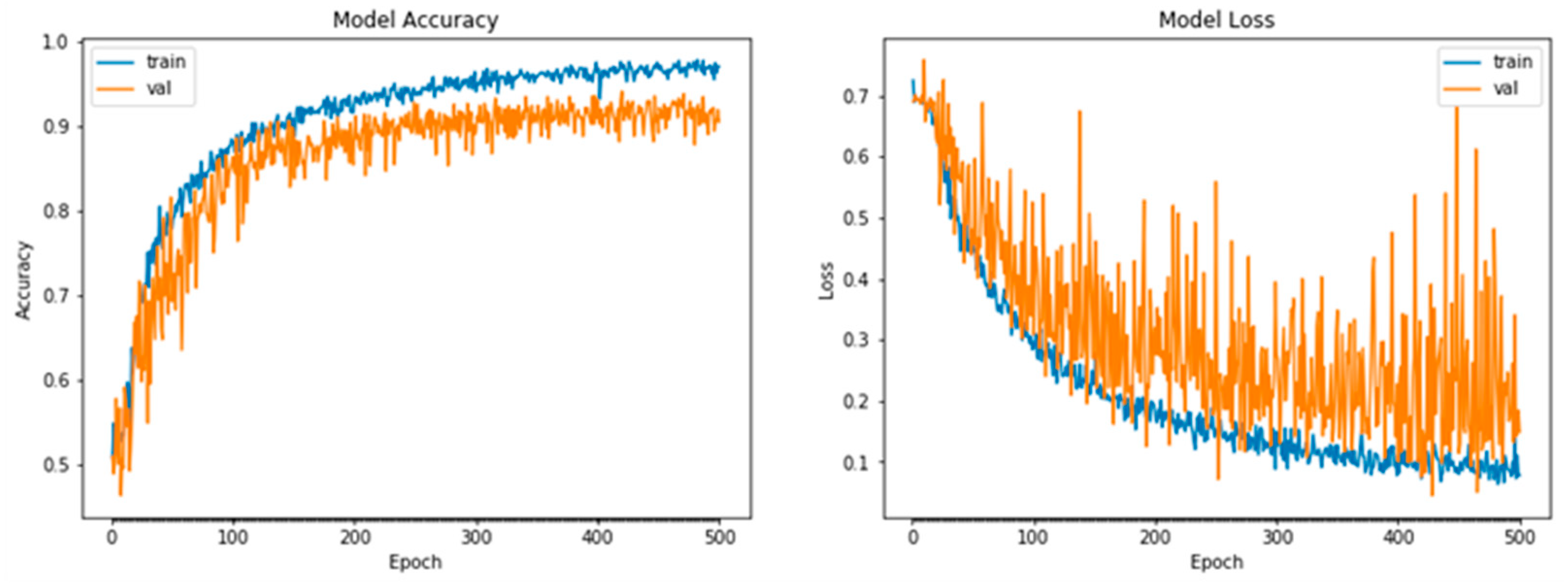

ICLR 2019 | 'Fast as Adam & Good as SGD' — New Optimizer Has Both | by Synced | SyncedReview | Medium

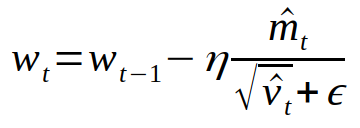

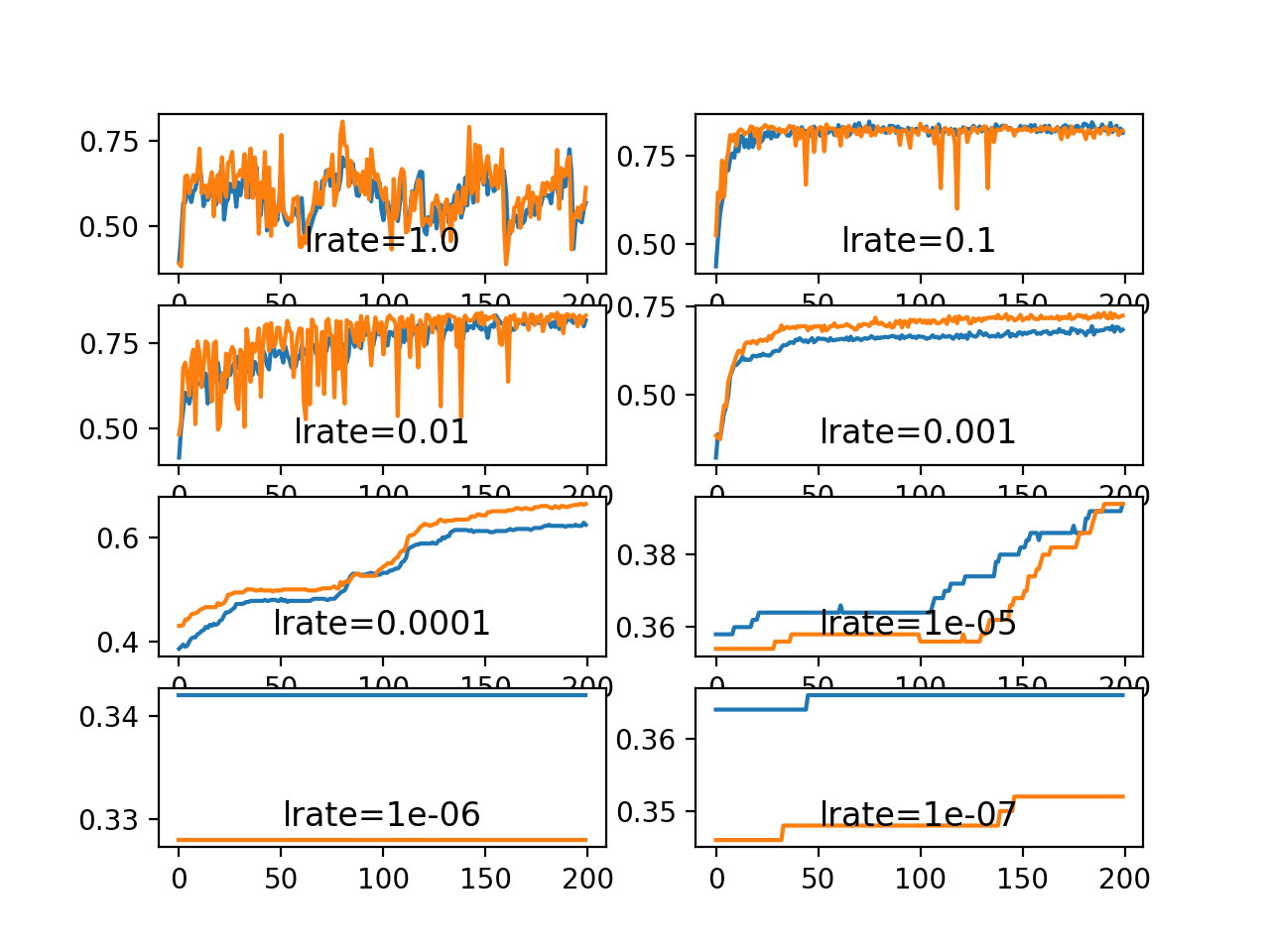

The training results with different optimizers and learning rates. (a)...

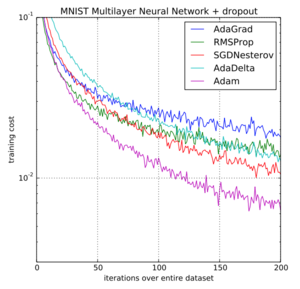

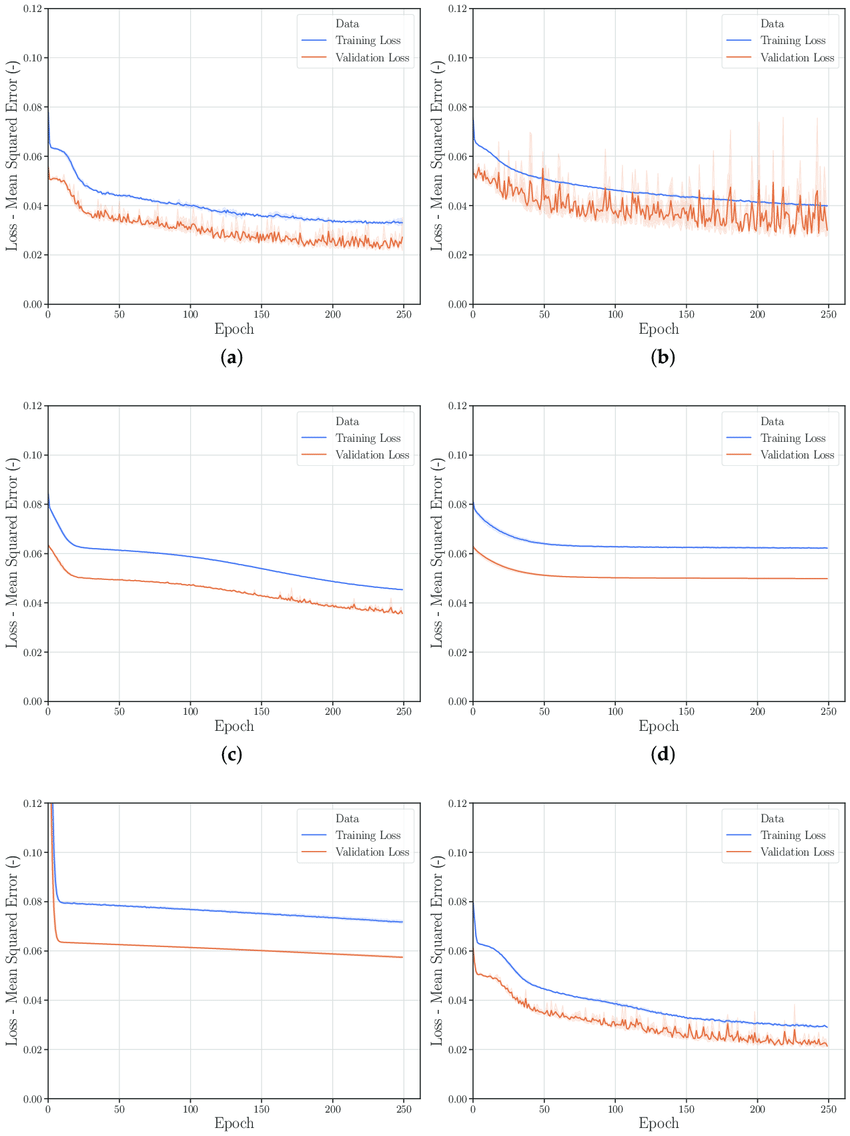

Figure A1. Learning curves with optimizer (a) Adam and (b) RMSprop, (c)...

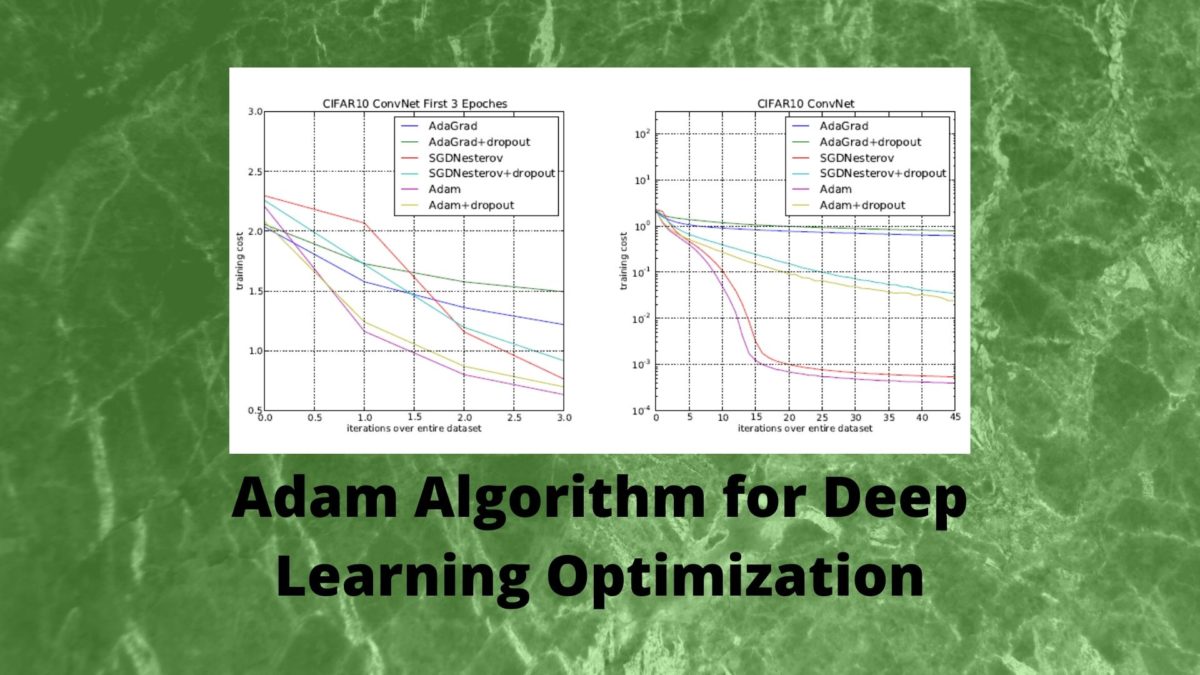

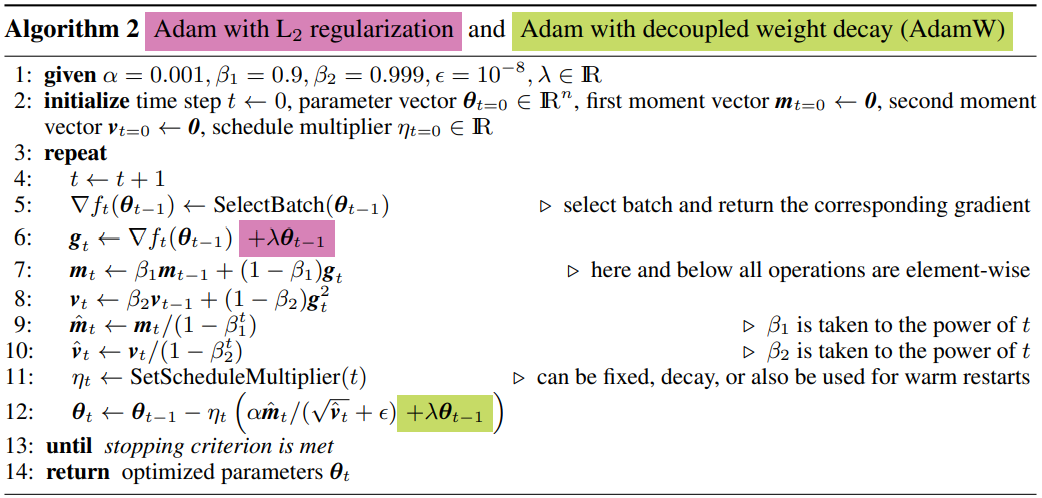

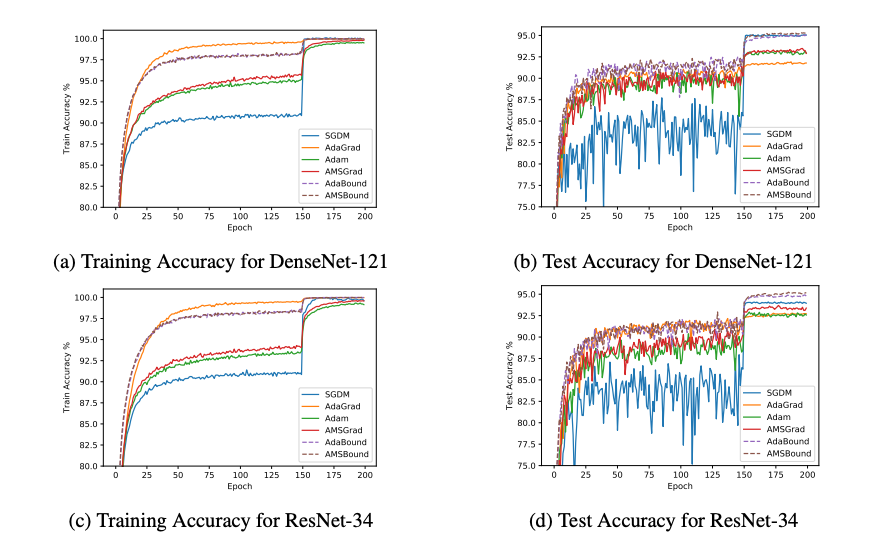

Applied Sciences | On the Relative Impact of Optimizers on Convolutional Neural Networks with Varying Depth and Width for Image Classification

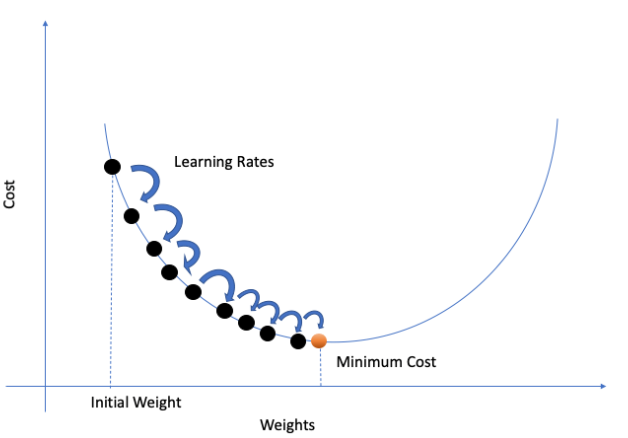

Adam is an effective gradient descent algorithm for ODEs. (a) Using a...